Kubecon San Diego 2019 is already over and, just like last year, it was huge: 12,000 attendees this time!

Here are the notes I took during the event. There’s a TL;DR version if you want to skip directly to the main takeaways, and a separate post specifically about Rejekts, the conference that happened even before Day 0.

Day 0: Contributor Summit

Day 0 (usually the Monday) at Kubecon is a kind of specialized pre-conference day: attendees can choose to attend any of the available pre-conferences (Cloud Native Storage Day, EnvoyCon, AWS Container Day, etc.). Most of them come at an additional fee (from approximately $50 to $500), though some are free (included with the main conference ticket).

I chose to attend the Kubernetes Contributor summit, open to not only active contributors, but to new contributors as well.

I really enjoyed it: the organizers did a good job running beginner as well as expert tracks on many aspects of the Kubernetes community (how to contribute, how to extend kubectl, status of the different SIGs, etc.).

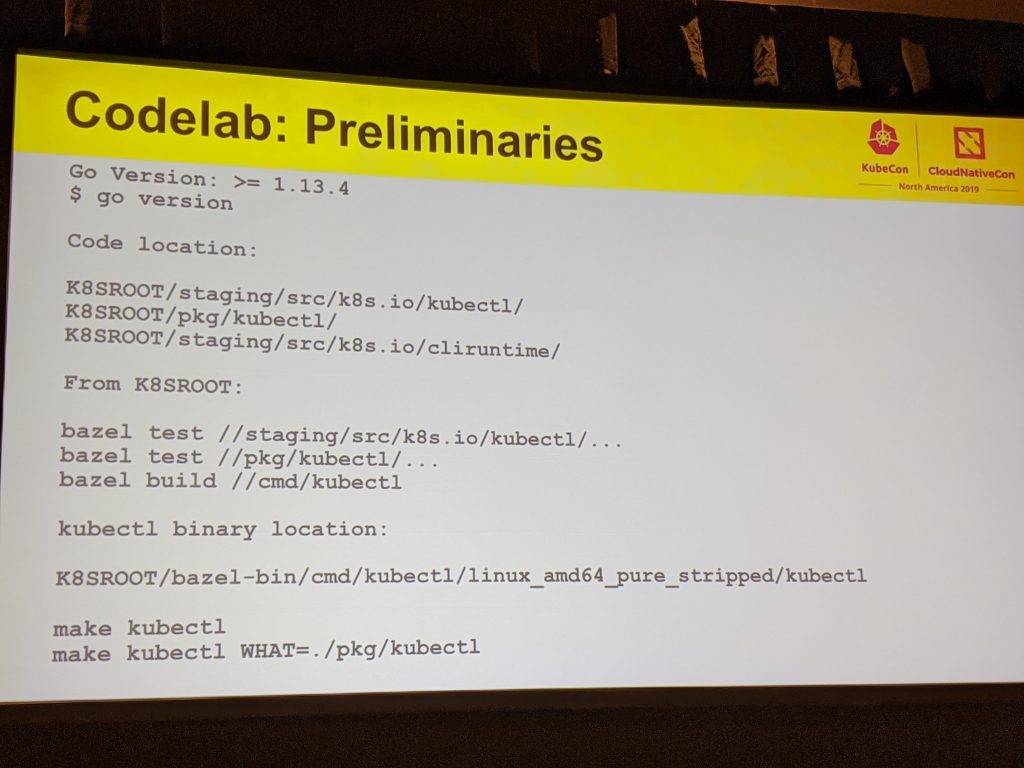

I started the day with a workshop on how to extend kubectl, by Sean Sullivan. You can actually try it out yourself: here are the instructions, and here is the diff of the workshop. You’ll be able to add a foo command to kubectl that submits some YAML to the API server. One interesting thing to notice is that you can build the modules with either Bazel (great to make sure you’re using the proper version of Go) or make.

I then visited the intermediate contributor track, where the speakers told us how to contribute PRs to Kubernetes, beginning by introducing the audience to the kubernetes/kubernetes (or k/k for insiders!) hierarchy; here are the slides. Most of the "action" happens in the staging/ subdirectory nowadays.

Another talk introduced the audience to the CI infrastructure for the Kubernetes project: Testgrid and Prow. Did you know that when you submit a PR to the Kubernetes project, GitHub is configured not to let you assign reviewers? That’s because Prow looks up in the OWNERS files who should be assigned to the PR.

I also got some info about Git usage at Kubernetes. It’s pretty standard: rebase on master and squash uninteresting commits. Cherry-picking is used in a special way though, for porting bug fixes to release branches.

Day 1, 2 and 3: Keynotes

The keynotes can be watched on the YouTube channel for Kubecon NA 2019.

Day 1 Keynotes

Dan Kohn from CNCF based his keynote on a simple metaphor: in Minecraft, you can assemble simple elements to create complex tools, the same way CNCF assembles simple elements (DNS, etcd, iptables that somehow evolved into eBPF) into a complete system: Kubernetes.

Cheryl Hung then got on stage and explained that there are now 500+ CNCF members, 100+ official partners.

Bryan Liles presented the CNCF project updates. He started with CoreDNS: it graduated in January 2019, and it keeps DNS simple.

Then came Vitess, which just recently graduated, presented by Sugu, PlanetScale CEO. They took the example of Slack running all its MySQL on Vitess, then jd.com, and finally Nozzle, a startup that could easily migrate from Azure to Google Cloud.

Linkerd is in incubation, targeting mandatory TLS for all TCP traffic in 2020.

Helm: more than 1M downloads per month and 29 project maintainers. It just celebrated its 3.0 release, which is tillerless; Helm 2 will still be supported for another year.

Open Policy Agent: a building block to enforce a wide range of policies across your project. It separates decision making from policy enforcement: the software asks what’s authorized, and OPA allows or denies; the policy language is Rego. The speaker also introduced WebAssembly to the audience: you can now compile Rego rules into WASM to speed up rule evaluation.

The CloudEvents project, a spec for describing events, was also mentioned (more on that in the session notes below).

Eric Boyd from Red Hat, part of the storage SIG, talked about Rook. Rook provides orchestration for storage providers and helps deploy non-cloud storage to K8s. Rook Ceph provides a consistent way to address storage in K8s clusters. The next evolution is "scale", i.e. storage from cluster to cluster.

Derek Collison, CEO of Synadia, gave the NATS project update. NATS (10 years old now, originally from CloudFoundry) is about messaging: services, event sourcing, streams, topics (including their observability and analytics), even "cloud messaging". Derek then mentioned a few NATS users and integrations (even Spring Boot and Kafka bridging), as well as monitoring integrated with Prometheus. To get started, you can access a live NATS system on the free community server demo.nats.io, or run your own with an easy one-liner or a docker run command.

What’s new in k8s 1.16?

- Windows support: Active Directory is in beta

- Ephemeral containers, for when kubectl exec isn’t enough: for example, a plugin that would allow attaching a debug container to an existing pod:

```
kubectl debug my-debug-container --image debian --attach
```

- cloud providers: now moving out of tree

- 32k+ contributors employed by 1k+ companies

OpenTelemetry makes observability simple. Observability tools used to suffer from fragmented solutions and lock-in. Now, OpenCensus and OpenTracing are joining forces in OpenTelemetry: simplified metrics and tracing, collection and compatibility bridges, and language support (Java, JS, Python, etc.).

Day 2 keynotes

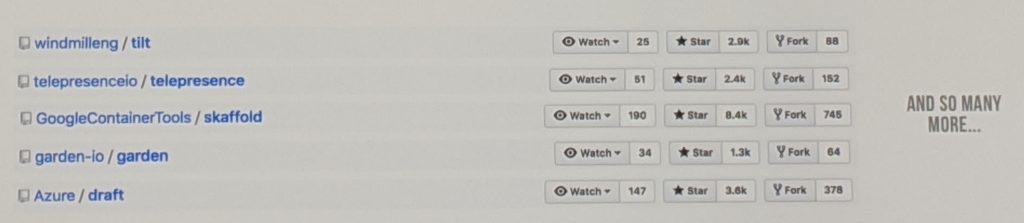

How can I remain an efficient developer with k8s? With tools?

How can I debug? With ephemeral containers, which work better than kubectl exec.

Network, phase 2, by Cisco. With Network Service Mesh (NSM), pod deployers can add an annotation to their pods to choose a previously created network (similar to RuntimeClass, which lets pods specify which container runtime they should run with).

IPv4/IPv6 talk by Tim and Kal: IPv6 support was added late, and some first steps made k8s IPv6 compatible. But that’s not all: everything in k8s assumes a single IP, whereas in an IPv6 world there can be several. How do you turn a singular field like IP into a plural field? The egress and ingress APIs had to be updated without breaking existing users. Dual stack is now supported for egress and ingress, which paves the way for network appliance integration.
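One common way to move from a singular to a plural field without breaking existing clients is to keep both fields and hold them consistent. Here is a minimal Python sketch of that idea; the field names `ip` and `ips` and the function `normalize_status` are hypothetical and are not the actual Kubernetes API or the approach shown in the talk.

```python
from typing import Dict, List


def normalize_status(status: Dict) -> Dict:
    """Return a copy where the legacy singular field and the plural field agree.

    Hypothetical field names: "ip" (legacy, singular) and "ips" (new, plural).
    """
    ips: List[str] = list(status.get("ips", []))
    legacy = status.get("ip")

    # An old writer only set the singular field: promote it to the plural one.
    if not ips and legacy:
        ips = [legacy]

    # If both are set, the singular field must mirror the first plural entry.
    if ips and legacy and ips[0] != legacy:
        raise ValueError("ip must match the first entry of ips")

    result = dict(status)
    result["ips"] = ips
    if ips:
        # Old readers that only know the singular field keep working.
        result["ip"] = ips[0]
    return result


if __name__ == "__main__":
    print(normalize_status({"ip": "10.0.0.1"}))
    print(normalize_status({"ips": ["10.0.0.1", "fd00::1"]}))
```

Old clients keep reading and writing the singular field, while dual-stack aware clients use the list.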

Google celebrated Go’s 10th birthday; they also mentioned Agones, a Kubernetes-based OSS game server.

Day 3 Keynotes

Bryan Liles came back on stage and introduced an interesting OSS tool from VMware: Octant, to investigate what’s happening in a Kubernetes cluster.

He went on to describe how Ruby on Rails brought convention over configuration; he then described how K8s complexity is necessary complexity (multiple targets, health checks, volumes, etc.).

He also recommended the usage of admission controllers.

Day 1, 2 and 3: Sessions

Introduction to Virtual Kubelet – Featuring Titus by Netflix – Ria Bhatia & Sargun Dhillon

It started from a Brendan Burns idea, as a TypeScript project, but has been rewritten in Go since then.

It does not replace the kubelet, it makes it more flexible.

A virtual kubelet registers itself as a Node, and can run pods.

It’s a library whose interface needs to be implemented by each provider.
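To illustrate the "library filled in by the provider" idea, here is a small Python sketch. Virtual Kubelet itself is written in Go; the class and method names below (`Provider`, `InMemoryProvider`, `VirtualNode`) are hypothetical and only mimic the shape of the pattern, not the project's real interface.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class Provider(ABC):
    """Hypothetical contract a backend must implement to run pods."""

    @abstractmethod
    def create_pod(self, pod: Dict) -> None: ...

    @abstractmethod
    def delete_pod(self, name: str) -> None: ...

    @abstractmethod
    def get_pods(self) -> List[Dict]: ...


class InMemoryProvider(Provider):
    """Toy provider that only records pods; a real one would call a cloud API."""

    def __init__(self) -> None:
        self._pods: Dict[str, Dict] = {}

    def create_pod(self, pod: Dict) -> None:
        self._pods[pod["name"]] = pod

    def delete_pod(self, name: str) -> None:
        self._pods.pop(name, None)

    def get_pods(self) -> List[Dict]:
        return list(self._pods.values())


class VirtualNode:
    """Looks like a node from the outside, delegates all work to the provider."""

    def __init__(self, name: str, provider: Provider) -> None:
        self.name = name
        self.provider = provider

    def run_pod(self, pod: Dict) -> None:
        self.provider.create_pod(pod)


if __name__ == "__main__":
    node = VirtualNode("virtual-node-1", InMemoryProvider())
    node.run_pod({"name": "web", "image": "nginx"})
    print([p["name"] for p in node.provider.get_pods()])
```

The node-facing logic stays the same whatever the backend; only the provider changes.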

Why Virtual Kubelet? To run your workloads outside of the existing cluster, and maybe even on IoT devices.

It’s used by Titus (created back in 2015, leveraging Docker, Mesos – it is now OSS), a container orchestrator by Netflix.

Managing Helm Deployments with Gitops at CERN – Ricardo Rocha, CERN

Kubernetes at CERN: more than 400 k8s clusters, 1000s of nodes.

They are using GitOps since everyone is familiar with Git.

Their Helm repos are hosted on ChartMuseum.

They use meta charts that bundle several charts together.

To store secrets, they use helm-secrets and a similar plugin: helm-barbican.

Argo Flux and GitOps: after a git push, Flux notices the change and updates the chart release, and the Helm operator finally redeploys it.

Jaeger Intro – Yuri Shkuro, Uber Technologies & Pavol Loffay, Red Hat

Jaeger graduated at CNCF at the end of October 2019.

Jaeger HotROD is an example app that was used during the talk; you can run it with Docker Compose.

Modern distributed systems are complex.

Metrics show that something is wrong, but don’t explain why.

Logs are a mess: concurrent requests on multiple hosts.

Trace spans capture all the requests and responses that were generated.

Tracing allows you to correlate logs belonging to a given span. Comparing spans helps you find out what was different between a working request and a failing one.
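As a rough illustration of correlating logs with spans and comparing a working trace with a failing one, here is a minimal Python sketch. The `Span` structure and the helper names are simplified assumptions, not Jaeger's data model or API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None for the root span
    operation: str
    error: bool = False


def logs_for_span(logs: List[Dict], span: Span) -> List[Dict]:
    """Keep only the log records tagged with this span's id."""
    return [record for record in logs if record.get("span_id") == span.span_id]


def compare_traces(ok: List[Span], failed: List[Span]) -> Dict[str, List[str]]:
    """Summarize which operations differ between two traces."""
    ok_ops = {s.operation for s in ok}
    failed_ops = {s.operation for s in failed}
    return {
        "only_in_ok": sorted(ok_ops - failed_ops),
        "only_in_failed": sorted(failed_ops - ok_ops),
        "errors": sorted(s.operation for s in failed if s.error),
    }


if __name__ == "__main__":
    ok = [Span("t1", "a", None, "GET /order"), Span("t1", "b", "a", "db.query")]
    failed = [Span("t2", "c", None, "GET /order"),
              Span("t2", "d", "c", "cache.get", error=True)]
    print(compare_traces(ok, failed))
```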

The Jaeger backend can aggregate traces from Zipkin, OpenTracing or OpenTelemetry.

The Jaeger collector can write traces to Kafka.

What’s new since last year? kubernetes operator, visual trace comparisons

Mario’s Adventures in Tekton Land – Vincent Demeester, Red Hat & Andrea Frittoli, IBM

Tekton is a set of components to run CI/CD in kubernetes.

With Tekton, everything is a CRD: the pipeline has tasks that are themselves decomposed into steps.
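The pipeline, task and step hierarchy can be pictured with a few Python dataclasses. This is only a sketch of the nesting described above: the class names mirror the concepts, but the fields and the `flatten` helper are hypothetical and do not reproduce the Tekton CRD schemas.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    name: str
    image: str
    command: List[str] = field(default_factory=list)


@dataclass
class Task:
    name: str
    steps: List[Step]


@dataclass
class Pipeline:
    name: str
    tasks: List[Task]


def flatten(pipeline: Pipeline) -> List[str]:
    """List every step as "task/step", in declaration order."""
    return [f"{task.name}/{step.name}" for task in pipeline.tasks for step in task.steps]


if __name__ == "__main__":
    build = Task("build", [Step("compile", "golang", ["go", "build", "./..."]),
                           Step("test", "golang", ["go", "test", "./..."])])
    deploy = Task("deploy", [Step("apply", "kubectl", ["kubectl", "apply", "-f", "k8s/"])])
    print(flatten(Pipeline("ci", [build, deploy])))
```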

Building Tekton with Tekton, instead of with Kubernetes Prow, is under way: Tekton is becoming independent from Knative and Google Cloud.

Jenkins X is built on top of Tekton; everything is reusable in Tekton.

Tekton does not yet support multi tenancy of projects in the same k8s cluster.

A Series of Fortunate CloudEvents – Ian Coffey, Salesforce

Events are flowing everywhere: can we make intelligent decisions about what requires events, when, and where?

What is a cloud event?

- A spec that adds a common format of metadata.

- Sane routing

- App logic slimmed down

- A framework that does not touch business logic

The specification of a cloud event requires a version, a type (or namespace really), a source and an id.
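Here is a minimal Python sketch of building an event with those four required attributes and checking that none is missing. The attribute names follow the CloudEvents spec (`specversion`, `type`, `source`, `id`); the helper functions and the example type and source values are hypothetical, and this does not use the official SDK.

```python
import uuid
from typing import Any, Dict, List, Optional

# The four required context attributes.
REQUIRED_ATTRIBUTES = ("specversion", "type", "source", "id")


def new_event(event_type: str, source: str, data: Optional[Any] = None) -> Dict[str, Any]:
    """Build an event dict with the required attributes and an optional payload."""
    event: Dict[str, Any] = {
        "specversion": "1.0",
        "type": event_type,
        "source": source,
        "id": str(uuid.uuid4()),
    }
    if data is not None:
        event["data"] = data
    return event


def missing_attributes(event: Dict[str, Any]) -> List[str]:
    """Return the required attributes that are absent or empty."""
    return [name for name in REQUIRED_ATTRIBUTES if not event.get(name)]


if __name__ == "__main__":
    event = new_event("com.example.build.finished", "/ci/pipeline/42", {"status": "ok"})
    print(event, missing_attributes(event))
```

Because the metadata is uniform, routers and filters can work on these attributes without ever parsing the business payload in `data`.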

Transport can be HTTP, NATS or AMQP.

Difficult parts: Event ordering, replaying, getting new sources.

There are dedicated SDKs for most languages.

Use cases: CI/CD workflow, Cron based functions, k8s event job and also Knative eventing: trigger and broker.

Demo with Knative Eventing.

Kafka or NATS could provide durability, since cloud events are stored only in memory. The apps simply listen; the filtering and the routing are done at the k8s level.

K9P: Kubernetes as 9P Files – Terin Stock, Cloudflare

Plan 9 from Bell Labs is an old technology for networked filesystems.

K9P is the Kubernetes API exposed as a 9P filesystem.

9p is a networked filesystem.

You must run K9P in its own process and use your client OS’s 9P implementation. There is native support in Linux and others, or you can use FUSE on top!

grep, sed, tail are all supported to interact with your cluster.

There are issues with automatic refresh: if a file is open, you need to close it and reopen it to see changes.

Introducing Metal³: Kubernetes Native Bare Metal Host Management – Russell Bryant & Doug Hellmann

Metal³ (metal cubed) allows operators to provision a Kubernetes cluster on bare-metal servers, which boot and receive the OS and configuration needed to join the Kubernetes cluster.

Why? It’s a K8s native API, and you can manage physical infra with k8s, in a self managed mode.

The BareMetalHost API exposes CRs that represent the newly available, provisionable nodes.

Ironic runs an OS in RAM to prepare the machine; the BareMetalHost CR can then be patched with an OS image.

K3s Under the Hood: Building a Product-grade Lightweight Kubernetes Distro – Darren Shepherd

K3s is a lightweight k8s distribution, composed of a single 50MB binary (memory requirement: 300MB) and CNCF certified. It’s perfect for the edge, but not only: it is production ready.

K3s was extracted from Rio (the distribution on top of k8s from Rancher).

Things developed:

- a shim on top of etcd API to connect to sqlite, postgres, etc.

- a Tunnel proxy to ease worker connectivity to master

- Helm CRD included, as well as Traefik, CoreDNS and monitoring

K3s future: run everywhere (including Windows).

Other projects: k3sup, and k3d for running k3s in Docker.

Inside Kubernetes Services – Dominik Tornow, Cisco & Andrew Chen, Google

A service directs traffic to a pod if it’s ready.

If it does not have a readiness probe, it will be considered ready if it’s alive; if it does not have a liveness probe, it will be considered alive as long as at least 1 container in the pod is running.
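The fallback chain described above can be written down as a couple of small functions. This Python sketch encodes the rule exactly as stated in these notes; `PodView` and its fields are hypothetical names, and this is not how the kubelet or the endpoints controller is actually implemented.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PodView:
    containers_running: List[bool]
    liveness: Optional[bool] = None   # None means no liveness probe is configured
    readiness: Optional[bool] = None  # None means no readiness probe is configured


def is_alive(pod: PodView) -> bool:
    """Use the liveness probe if present, else require at least one running container."""
    if pod.liveness is not None:
        return pod.liveness
    return any(pod.containers_running)


def is_ready(pod: PodView) -> bool:
    """Use the readiness probe if present, else fall back to aliveness."""
    if pod.readiness is not None:
        return pod.readiness
    return is_alive(pod)


def service_targets(pods: List[PodView]) -> List[PodView]:
    """A service only directs traffic to ready pods."""
    return [pod for pod in pods if is_ready(pod)]


if __name__ == "__main__":
    pods = [PodView([True]), PodView([True], readiness=False), PodView([False, False])]
    print(len(service_targets(pods)))
```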

Service sits between pods and endpoints

kube-proxy is an edge controller that runs on each node and installs NAT rules.

Day 3: Workshops

Gitops workshop

With Gitops, your desired software system state is described in Git.

An agent ensures the live system is in the same state as defined in Git.

First Demo: poor man’s gitops: the agent is just kubectl apply, scheduled in a CronJob pod running every minute to reapply a web app deployment; that demo gave a good idea of what Gitops is about.
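The agent's job boils down to a reconcile loop: compare what Git says with what is live, then apply the difference. Below is a minimal Python sketch of that loop using plain dictionaries; the function names and the `prune` flag are hypothetical, and the workshop demo itself simply re-ran kubectl apply on a schedule.

```python
from typing import Dict, List, Tuple

State = Dict[str, Dict]  # resource name -> manifest


def plan(desired: State, live: State, prune: bool = False) -> List[Tuple[str, str]]:
    """Compute the actions needed to bring the live state to the desired state."""
    # Apply anything that is missing or differs from what Git describes.
    actions = [("apply", name) for name in sorted(desired) if live.get(name) != desired[name]]
    if prune:
        # Optionally remove resources that are no longer described in Git.
        actions += [("delete", name) for name in sorted(live) if name not in desired]
    return actions


def reconcile(desired: State, live: State, prune: bool = False) -> State:
    """Return the live state after executing the plan (the input is not mutated)."""
    result = dict(live)
    for verb, name in plan(desired, live, prune):
        if verb == "apply":
            result[name] = desired[name]
        else:
            del result[name]
    return result


if __name__ == "__main__":
    desired = {"web": {"replicas": 2}}
    live = {"web": {"replicas": 5}, "old-job": {}}
    print(plan(desired, live, prune=True))
    print(reconcile(desired, live, prune=True))
```

Running the loop again on an already reconciled state yields an empty plan, which is what makes it safe to run every minute.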

Then, we used Argo Flux (now partners) during the workshop.

Instructions are available here.

The workshop is pretty quick to finish, try it out!

ServiceMesh for developers

This workshop was more ambitious than the previous one.

It was very interesting, with an observability mindset.

Instructions are available here.

Operator workshop from RedHat

Very interesting, similar to last year’s workshop, but updated to match the latest versions of the operator framework.

Instructions are available here.

In 2019, to build an operator, we have the choice between an Ansible-based operator, a Helm-based operator, writing it directly in Go (the operator framework is based on Kubebuilder), or even just defining some YAML with KUDO!

Zero to Operator by Solly Ross

Unfortunately, I could not attend this one.

Here are the slides though, and the workshop instructions.

Closing notes

Kubecon is no ordinary conference. Like any conference, it provides attendees with keynotes (some sponsored, as indicated in the conference schedule) and sessions (something like 20 tracks in parallel?!), but also other specialized conferences on Day 0, as well as special events such as the NewStack pancake breakfast (better come early, at 7:15AM, if you want to attend that one and eat pancakes!) or the diversity lunch (open to everyone and one of the best events in the conference, where you can have lunch with a specialist on a topic such as observability or operators). Oh, and I haven’t mentioned all the Kubecon parties, where you can meet different speakers and network with attendees too!